GNNBleed: Inference Attacks to Unveil Private Edges in Graphs with Realistic Access to GNN Models

Authors: Zeyu Song (Penn State University), Ehsanul Kabir (Penn State University), Shagufta Mehnaz (Penn State University)

Volume: 2025

Issue: 3

Pages: 61–87

DOI: https://doi.org/10.56553/popets-2025-0089

Artifact: Available, Functional

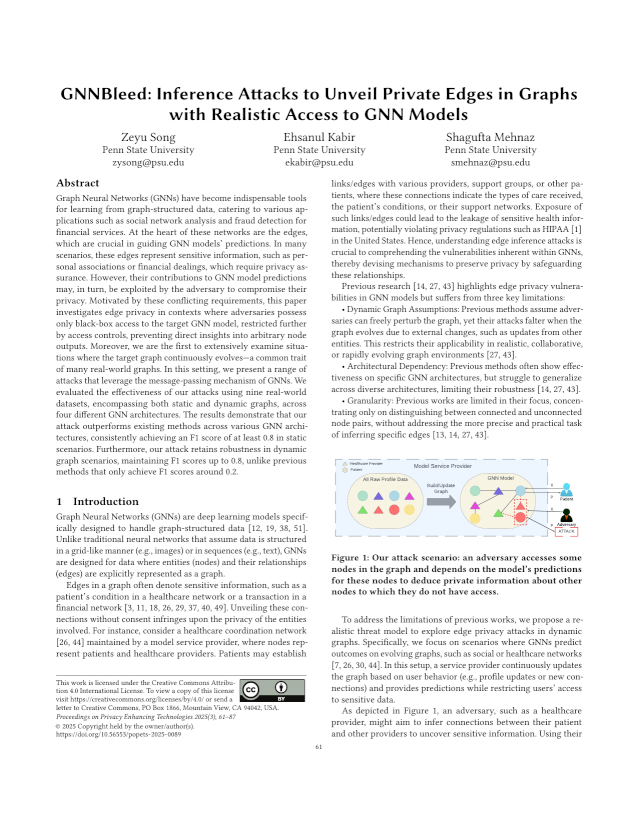

Abstract: Graph Neural Networks (GNNs) have become indispensable tools for learning from graph-structured data, catering to various applications such as social network analysis and fraud detection for financial services. At the heart of these networks are the edges, which are crucial in guiding GNN models' predictions. In many scenarios, these edges represent sensitive information, such as personal associations or financial dealings, which require privacy assurance. However, their contributions to GNN model predictions may, in turn, be exploited by the adversary to compromise their privacy. Motivated by these conflicting requirements, this paper investigates edge privacy in contexts where adversaries possess only black-box access to the target GNN model, restricted further by access controls, preventing direct insights into arbitrary node outputs. Moreover, we are the first to extensively examine situations where the target graph continuously evolves—a common trait of many real-world graphs. In this setting, we present a range of attacks that leverage the message-passing mechanism of GNNs. We evaluated the effectiveness of our attacks using nine real-world datasets, encompassing both static and dynamic graphs, across four different GNN architectures. The results demonstrate that our attack outperforms existing methods across various GNN architectures, consistently achieving an F1 score of at least 0.8 in static scenarios. Furthermore, our attack retains robustness in dynamic graph scenarios, maintaining F1 scores up to 0.8, unlike previous methods that only achieve F1 scores around 0.2.

Keywords: graph neural networks, graph privacy, edge inference attack

Copyright in PoPETs articles is held by their authors. This article is published under a Creative Commons Attribution 4.0 license.