AI-in-the-Loop: Privacy Preserving Real-Time Scam Detection and Conversational Scam-baiting by Leveraging LLMs and Federated Learning

Authors: Ismail Hossain (University of Texas at El Paso), Sai Puppala (Southern Illinois University Carbondale), Md Jahangir Alam (University of Texas at El Paso), Sajedul Talukder (University of Texas at El Paso)

Volume: 2026

Issue: 1

Pages: 87–114

DOI: https://doi.org/10.56553/popets-2026-0006

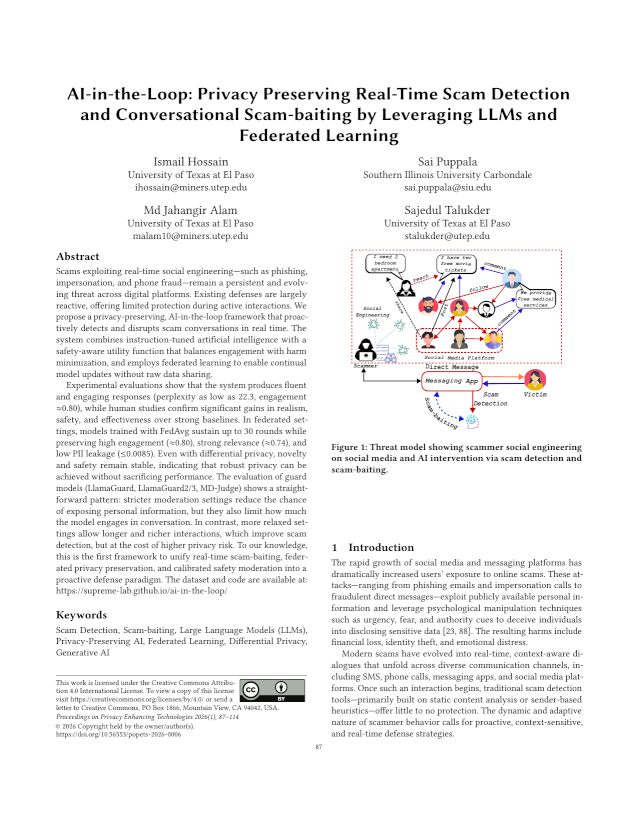

Abstract: Scams exploiting real-time social engineering, such as phishing, impersonation, and phone fraud, remain a persistent and evolving threat across digital platforms. Existing defenses are largely reactive and offer limited protection during active interactions. We propose a privacy-preserving, AI-in-the-loop framework that proactively detects and disrupts scam conversations in real time. The system combines instruction-tuned large language models (LLMs) with a safety-aware utility function that balances engagement against harm minimization, and it employs federated learning to enable continual model updates without sharing raw data. Experimental evaluations show that the system produces fluent and engaging responses (perplexity as low as 22.3, engagement ≈ 0.80), while human studies confirm significant gains in realism, safety, and effectiveness over strong baselines. In federated settings, models trained with FedAvg maintain high engagement (≈ 0.80), strong relevance (≈ 0.74), and low PII leakage (≤ 0.0085) over up to 30 training rounds. Even with differential privacy, novelty and safety remain stable, indicating that robust privacy can be achieved without sacrificing performance. The evaluation of guard models (LlamaGuard, LlamaGuard2/3, MD-Judge) reveals a clear trade-off: stricter moderation settings reduce the chance of exposing personal information but also limit how much the model engages in conversation, whereas more relaxed settings allow longer and richer interactions that improve scam detection at the cost of higher privacy risk. To our knowledge, this is the first framework to unify real-time scam-baiting, federated privacy preservation, and calibrated safety moderation into a proactive defense paradigm.
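The federated component summarized above can be illustrated with a single FedAvg aggregation step. The following is a minimal sketch under stated assumptions, not the authors' implementation: model parameters are flattened into plain Python lists, each client is weighted by its local sample count, and the optional clip-and-Gaussian-noise step (`clip_norm`, `noise_multiplier`) is a generic DP-FedAvg-style illustration. The function names and parameters are hypothetical, and the code does not compute an (ε, δ) privacy budget.

```python
import math
import random
from typing import List, Optional, Sequence

Vector = List[float]


def l2_norm(v: Sequence[float]) -> float:
    return math.sqrt(sum(x * x for x in v))


def clip_update(update: Sequence[float], clip_norm: float) -> Vector:
    """Scale an update down so that its L2 norm is at most clip_norm."""
    norm = l2_norm(update)
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in update]


def fedavg_round(
    global_params: Sequence[float],
    client_params: Sequence[Sequence[float]],
    client_sizes: Sequence[int],
    clip_norm: Optional[float] = None,
    noise_multiplier: float = 0.0,
    rng: Optional[random.Random] = None,
) -> Vector:
    """Hypothetical FedAvg step: merge locally trained parameters into new global ones.

    Only parameter vectors reach the server; raw conversations stay on clients.
    Each client's update (local - global) is weighted by its sample count.
    """
    if not client_params or len(client_params) != len(client_sizes):
        raise ValueError("need at least one client and one sample count per client")
    if noise_multiplier > 0 and clip_norm is None:
        raise ValueError("adding noise requires a clipping bound")
    total = float(sum(client_sizes))
    if total <= 0:
        raise ValueError("total sample count must be positive")

    dim = len(global_params)
    avg_update = [0.0] * dim
    for params, n in zip(client_params, client_sizes):
        update = [p - g for p, g in zip(params, global_params)]
        if clip_norm is not None:
            update = clip_update(update, clip_norm)  # bound each client's influence
        weight = n / total
        for i in range(dim):
            avg_update[i] += weight * update[i]

    if noise_multiplier > 0:
        rng = rng or random.Random()
        # Noise scale tied to the clipping bound and the largest client weight:
        # zeroing out one clipped update moves the mean by at most this much.
        sensitivity = clip_norm * max(client_sizes) / total
        sigma = noise_multiplier * sensitivity
        avg_update = [u + rng.gauss(0.0, sigma) for u in avg_update]

    return [g + u for g, u in zip(global_params, avg_update)]


if __name__ == "__main__":
    global_w = [0.0, 0.0]
    local_w = [[1.0, 2.0], [3.0, 4.0]]  # toy "locally fine-tuned" parameters
    sizes = [1, 3]                       # hypothetical conversations per client
    print(fedavg_round(global_w, local_w, sizes))  # plain FedAvg
    print(fedavg_round(global_w, local_w, sizes, clip_norm=1.0,
                       noise_multiplier=0.5, rng=random.Random(0)))  # clipped + noised
```

Weighting updates by sample count is the standard FedAvg choice; clipping before averaging is what makes the added Gaussian noise meaningful, since it caps how far any single client can move the shared model.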

Keywords: Scam Detection, Scam-baiting, Large Language Models (LLMs), Privacy-Preserving AI, Federated Learning, Differential Privacy, Generative AI

Copyright in PoPETs articles is held by their authors. This article is published under a Creative Commons Attribution 4.0 license.